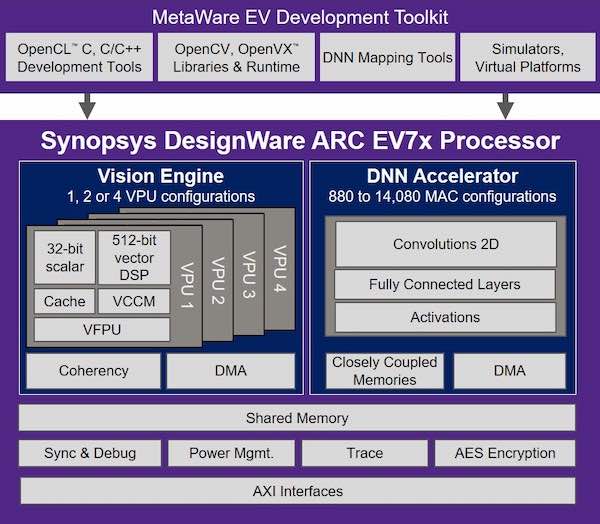

Synopsys' ARC Embedded Vision Processors Deliver Industry-Leading 35 TOPS Performance for AI | Maker Pro

Mipsology Zebra on Xilinx FPGA Beats GPUs, ASICs for ML Inference Efficiency - Embedded Computing Design

Not all TOPs are created equal. Deep Learning processor companies often… | by Forrest Iandola | Analytics Vidhya | Medium

A 0.32–128 TOPS, Scalable Multi-Chip-Module-Based Deep Neural Network Inference Accelerator With Ground-Referenced Signaling in 16 nm | Research

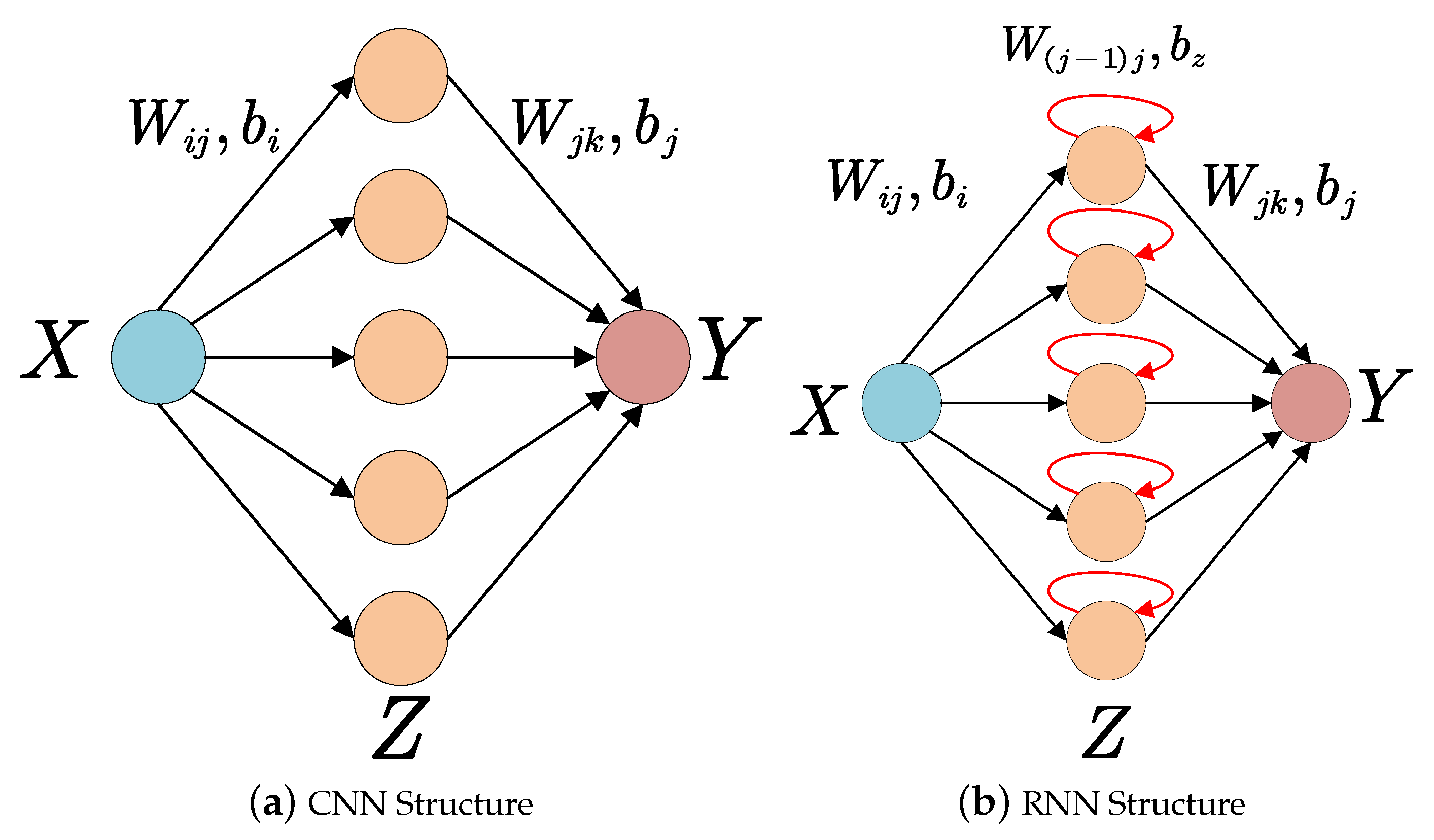

Electronics | Free Full-Text | Accelerating Neural Network Inference on FPGA-Based Platforms—A Survey

![\[VLSI 2018\] A 4M Synapses integrated Analog ReRAM based 66.5 TOPS/W Neural-Network Processor with Cell Current Controlled Writing and Flexible Network Architecture](https://t1.daumcdn.net/cfile/tistory/993DBA3E5BAF92CE38)

[VLSI 2018] A 4M Synapses integrated Analog ReRAM based 66.5 TOPS/W Neural-Network Processor with Cell Current Controlled Writing and Flexible Network Architecture

A 1.32 TOPS/W Energy Efficient Deep Neural Network Learning Processor with Direct Feedback Alignment based Heterogeneous Core Architecture | Semantic Scholar